2019 Prediction: Post-mobile, Edge Strikes Back (1)

The future is on the edge. 5G is not an element that shifts processing from the edge to the cloud, but rather infrastructure for distributed cooperation among edge devices. The tech giants are expanding from the large cloud to the edge.

Read the second half: 2019 Prediction: Post-mobile, Edge Strikes Back (2)

Summary

The economy that mobile phones generated in rich countries is saturating. The future of mobile lies in "the other half of the world" that is not yet connected to the Internet. In these emerging countries there is an opportunity to build a digital economy that leapfrogs the rich countries, as China has done. Mobile still has an important impact on the world economy.

We are facing the limit of performance improvement in von Neumann computers. Computer architecture and software are turning toward diversification, and the most typical area is machine learning. Machine learning plays a very important role in edge computing. Machines that make decisions autonomously will help with all kinds of human work.

The future is on the edge. 5G is not an element that shifts processing from the edge to the cloud, but rather infrastructure for distributed cooperation among edge devices. The tech giants are expanding from the large cloud to the edge. Machine learning that is as cheap and available as tap water is required in order to process the large-scale data generated by huge numbers of devices at the edge.

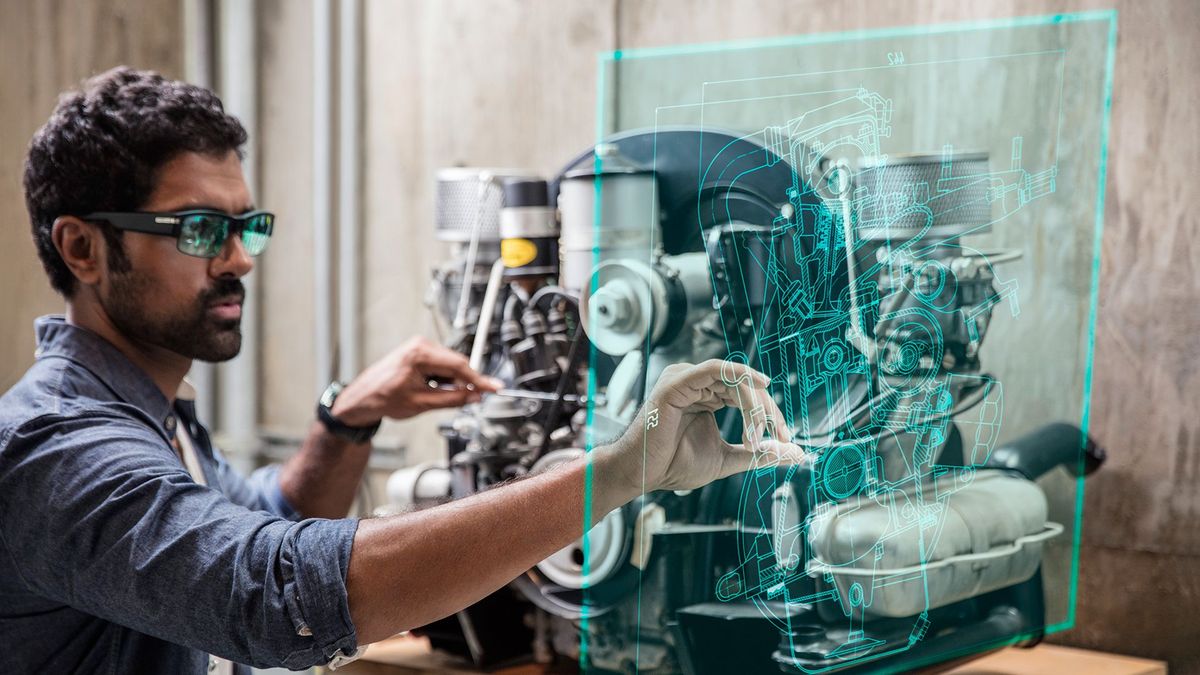

The human-computer interface (HCI) has so far concentrated on the screen, but it will spread to AR / VR / MR and voice.

These changes will have a tremendous impact on our economy, which is very exciting.

Target Reader

I wrote this article to explore post-mobile business trends. The intended audience is people working in the tech industry, the media and advertising industry, consulting firms, and the financial industry, as well as entrepreneurs, investors, and people who are looking for jobs or will soon work in adjacent industries. I would particularly like business people to read it. It contains technical explanations, but you can skip them and still take away the essence. The era of "there is no need to understand the technical aspects on the business side" is over.

I wrote most of this article on a smartphone. I have grown bored with the smartphone, and I am looking for new value in computing. Let's move on to the next world together.

0. Post Mobile

Peter Thiel talked about mobile in an interview with The New York Times: "We know what a smartphone looks like and does. It's not the fault of Tim Cook, but it's not an area where there will be any more innovation."

According to the Internet measurement firm Comscore's ["Global Digital Future in Focus 2018"](https://www.comscore.com/Insights/Presentations-and-Whitepapers/2018/Global-Digital-Future-in-Focus-2018-Canada-Edition), the global trends among Internet users are as follows.

- Smartphones account for 61.9% of digital time spent. "Mobile" (smartphones and tablets) accounts for more than 70%.

- Mobile users spend more than twice as much time as desktop users

- Apps account for over 80% of mobile time.

- In rich countries, the majority of users are multi-platform and use both desktop and mobile, but mobile-only Internet users are increasing globally. Mobile-only users tend to dominate in emerging countries.

Facebook, which Thiel invested in and served as a director, is a good example of this trend. What began as a desktop website for Harvard students concentrated its development resources on mobile as the trend emerged. Now most of Facebook's traffic comes from mobile apps. According to its 2018 Q2 earnings call, more than 90% of its advertising revenue came from mobile. DAU (Daily Active Users) is 1,471 million, of which 1 billion are in Asia-Pacific and other regions where people mainly use the Internet on mobile.

Mobile will not die the way the French monarchy did. The mobile trend has two frontiers: one is the unconnected, and the other is the extraordinary evolution of computing itself.

Cheap phones give connectivity to people who do not have the Internet. Rich countries, where mobile is now a given, will enter a season of diversification and tremendous evolution in computing. In addition, rich countries will see computer networks and the human-machine interface (HMI) become more sophisticated. You ought to be very excited, right?

1. The Unconnected

There are many people all over the world who do not have a mobile phone. In other words, there are still many people who are not Internet users. Mobile is a good "gateway drug" for the unconnected.

The number of global Internet users reached 3.6 billion in 2017, accounting for 49% of the world population. The other half has not yet been "enlightened". However, the growth in Internet users has slowed, from 12% year on year in 2016 to only 7% in 2017. Now that half of the world population has been reached, growth is expected to slow even further (Kleiner Perkins, ["Internet Trends Report 2018"](https://www.kleinerperkins.com/perspectives/internet-trends-report-2018)).

There are several challenges. The spread of smartphones is inseparable from the supply of Internet connectivity. If wireless networks are not widespread, smartphones are just "phones packed with transistors". Smart, clean, and consistent government leadership is needed for stable connectivity and access to cloud computing, but emerging economies often struggle to maintain a functional government. Even a $100 smartphone and tens of dollars in communication costs are too expensive for low-income people in developing countries.

The "gateway drug" should be cheaper. It is not a headset that costs around 50,000 yen, nor an expensive iPhone on which the seller earns a profit of nearly 50%, but a "cheap phone". In emerging countries, the cheap phone is "the future of the Internet".

India's steadily growing economy is a typical indicator. According to [IDC India](https://www.idc.com/getdoc.jsp?containerId=pr 44210918), smartphone vendors shipped 33.5 million units in India in 2018 Q2, up 20% from the same period of the previous year. In a market with an average selling price (ASP) of around 150 dollars, Chinese vendors such as Xiaomi, Vivo, and Oppo are competing fiercely.

The supply of connectivity is also progressing in India. According to telecomlead.com, wireless network subscribers reached 424.67 million in 2017, accounting for 95% of Internet subscribers in India. Since Reliance, India's largest conglomerate, entered the telecom carrier business, 4G users have increased rapidly, reaching 238 million. The speed of 4G is only one third of the world average, but it is certain that India is eager to catch up with rich countries. At the application layer, advanced mobile-based services are being attempted with funding from the West, China, and SoftBank. Macroeconomic factors such as per capita GDP and urbanization, which make wireless networks easier to roll out and improve resource allocation, will affect the development of the mobile Internet in India.

Even in India, where governance is unstable, the supply of mobile phones and connectivity has been successful. A similar scenario can be expected in the Middle East and Africa.

If we take China, which experienced miraculous development, as a precedent, it is reasonable to think that the ASP of smartphones will rise in proportion to a country's economic situation. A few years ago, major Chinese manufacturers such as Huawei and Xiaomi mainly produced and sold cheap phones, but they have recently shifted to the medium and high price ranges.

India's problem is that it has no Shenzhen, that is, no electronics manufacturing base. The majority of its electronics are imported from China, and most of its smartphones come from Shenzhen.

Knowledge of hardware and software does not accumulate in the domestic market, and India might become a mere export destination. The countries of the Middle East and Africa may well inherit this challenge.

1.1 China remains the world's factory

China is the world's factory for electronics, and smartphone production is unlikely to move abroad. Chinese enterprises not only dominate manufacturing but are also catching up with North American tech companies in technology development.

Huawei's growth is phenomenal. It resembles the rise of Japanese electronics in the 1980s (most Japanese electronics makers had lost their competitiveness by the late 2010s). The smartphone "HUAWEI P20 Pro" is a masterpiece that shows off its camera performance and the power of Huawei's self-designed SoC (system on chip). Huawei has obtained a large number of semiconductor patents very quickly and can design its own AI chip.

Huawei's new SoC, the Kirin 980, competes with the iPhone's A12 Bionic for the title of "first 7 nm process in history" (both chips are fabricated by TSMC). The Kirin 980 is expected to have 6.9 billion transistors, the same as the A12 Bionic. The company has shown comparative data against Qualcomm's Snapdragon 845, claiming that the Kirin 980 is 37% better in performance and 32% better in power efficiency ([Reference: PC Watch](https://pc.watch.impress.co.jp/docs/news/event/1141126.html)).

However, the current Kirin 970 loses to the Snapdragon 845. A single-core benchmark of the Snapdragon 855 was leaked on Geekbench, and it was a very good score (Source: Geekbench). The Galaxy S10 with the Snapdragon 855 will be released in 2019, when the Kirin 980, A12 Bionic, and Snapdragon 855 will compete with each other. According to Geekbench comparisons, the A12 keeps some lead in single-core CPU performance. Even so, the industry is entering a unique era in the history of smartphone chips, in which the power of the three players is closely balanced.

It is important for Huawei to compete with Apple, Intel, and Qualcomm in high-end SoCs. A future in which high-end mobile phones are developed and manufactured entirely in China, without crossing the Pacific Ocean, is just around the corner.

In addition, Taiwan's TSMC is extremely important for chip production. It is expected that only TSMC will be able to manufacture the aforementioned "first-ever" 7 nm process in 2018, and TSMC claims it will manufacture 5 nm in 2019. This means that TSMC has the proprietary advanced technology and the huge investment capacity required to realize this. The electronics assembly giant Foxconn is also Taiwanese, and Taiwan is a major investor in Shenzhen, the Pearl River Delta, and the Yangtze Delta. The electronics cluster around Taiwan and Shenzhen is extremely valuable, and it is doubtful that other players will be able to compete with the area in the near future.

1.2 An independent Chinese platform

Huawei is said to be developing its own OS. Currently, Android and iOS hold 99.9% of the smartphone OS market. It is a duopoly.

According to the South China Morning Post, Huawei is said to have begun developing its own OS in 2012, when US authorities investigated Huawei and ZTE over an "allegation of national security issue". Huawei founder Ren Zhengfei said at the time that the company should have its own OS to prepare for the worst case. The Trump administration put pressure on Huawei and ZTE again this summer (ZTE was temporarily hit with a trade ban, and the authorities have kept it under monitoring since). The paper reckons that the secret OS development has become very important again. Company executives, on the other hand, officially explain that they do not need to develop their own mobile OS and that Android is "totally acceptable".

CCS Insight, which publishes interesting annual forecasts, [predicted](https://www.theregister.co.uk/2018/10/04/ccs_insight_tech_predictions/) that China is likely to adopt a proprietary OS by 2022 and that this will cause a "fragmentation of technology control by the United States". CCS believes that China is taking the initiative in 5G and winning mind share for its services throughout the world.

"The current political tension between China and the US and ensuing troubles for ZTE and Huawei present a strong incentive for other Chinese companies to create their own operating system for smart devices. Spurred by a desire to quickly reduce their dependence on US companies, Chinese technology players use the replacement of 4G smartphones with 5G-ready devices to advance the transition to a home-grown platform."

Huawei currently designs devices, SoCs, and some applications, so it is natural for the firm to consider designing an OS as well. But Android is open source. It is reasonable to ride on Google, which can pour abundant development resources into the OS. Android users outside China depend heavily on applications such as YouTube and Google. The cost of differentiating its own OS from Android is high, as the case of Windows Mobile showed. Huawei is an electronics giant that Google cannot ignore, but the conditions for its own OS are not yet in place.

CCS also noted that Tencent and Alibaba are becoming more important in Western Europe and emerging markets. Regrettably, they are favored by the authoritarian governments of many emerging countries as a means of "scoring citizens". Ubiquitous surveillance allows states to monitor individual activities in detail, and political opponents suffer and face economic difficulties when applying for credit, work, or travel.

1.3 Cutting Edge of Tech Economy

What will the gateway drug bring to the unconnected world? Something fantastic: the "tech economy". China is a typical example of how a mobile-first spread of the Internet brought about a new economy. Consumers in wealthy countries have preconceptions about digital services, and some people are still stuck on the screens of portal sites. In China, the mobile Internet suddenly arrived where there had been nothing, and user convenience was pursued thoroughly. In commerce and finance, China has created digital businesses that far surpass those of the rich countries that went before it. I wrote about the contrast between wealthy and emerging countries in finance a few years ago (["Digital payment revolution is occurring in Asia: China and India go beyond developed countries"](https://digiday.jp/platforms/asian-digital-revolution/)). A few years after that article, Japan is finally trying to put regulation in place.

As the Chinese market has grown, the large-scale rollout of digital business models into society has progressed rapidly. The size of China's Internet user base encourages players to keep experimenting and enables digital players to achieve economies of scale quickly. As of August 2018, China has 800 million Internet users, roughly the combined scale of the European Union and the United States.

The enthusiasm for digital tools widely shared by Chinese consumers supports growth, promotes the rapid introduction of innovation, and makes China's digital players and their business models very competitive. According to McKinsey's "China's digital economy: A leading global force", nearly one in five Internet users in China relies on mobile only, compared to only 5% in the United States. About 68% of Internet users in China use mobile digital payments, compared to only about 15% in the United States.

China's three largest Internet giants have built a rich ecosystem that extends beyond themselves. Baidu, Alibaba, and Tencent, also known as BAT, are building a solid position in the Internet world and, at the same time, displacing poor-quality and inefficient "offline markets". The BAT companies have developed a multifaceted, multi-industry digital ecosystem that touches every aspect of consumer life. In 2016 BAT provided 42% of total venture capital investment in China, playing a much larger role than Amazon, Facebook, Google, and Netflix, which accounted for only 5% of US venture capital investment (Source: "China's digital economy: A leading global force"). Besides the three majors, hardware manufacturers such as Huawei, Xiaomi, and DJI, and companies such as Toutiao and Didi, have also built their own ecosystems. Chinese digital players enjoy the remarkable advantage of close connections with hardware manufacturers. Given the strength of its hardware manufacturing capacity, the industrial cluster of the Pearl River Delta is likely to remain a major producer of Internet-connected devices.

Not only does the Chinese government apply stringent regulations, it also allows digital players to experiment and has become an active supporter of tech companies. As the market matures, the government and the private sector have become increasingly active in shaping the development of the digital economy through regulation and enforcement. Today, the government plays an active role in building world-class infrastructure to support digitization as an investor, developer, and consumer.

1.4 Conclusion on "the unconnected"

The mobile trend is in developing countries, not in the rich countries of the West. When connectivity spreads to places that have had none, people begin to enjoy the various benefits that computer networks provide. New kinds of business that have never been seen before will emerge and change the way people live in the real world. New ideas have the opportunity to enrich people's lives with creative, highly beneficial products that could not have been imagined in wealthy countries. China has demonstrated this. India and Africa should follow next.

2. Sophistication in rich countries

Now let's look at the other frontier: the progress of the mobile Internet in rich countries. Phones brought mobility to computing, but in the next era computing will go beyond mobility and be "everywhere". Machine learning is the most promising way to give computing an interesting diversity. Augmented reality (AR) will be the optimal form of human-machine interface (HMI).

In rich countries, evolution occurs simultaneously in various areas. When, and how much, each of these distributed elements will change the world is highly volatile and unpredictable. Because everything is hyperconnected, a small change has enormous externalities for everything else. From here on I will give a technical explanation. To foresee the future of mobile, we have to widen the scope and focus on the future of computing.

2.1 Post Moore

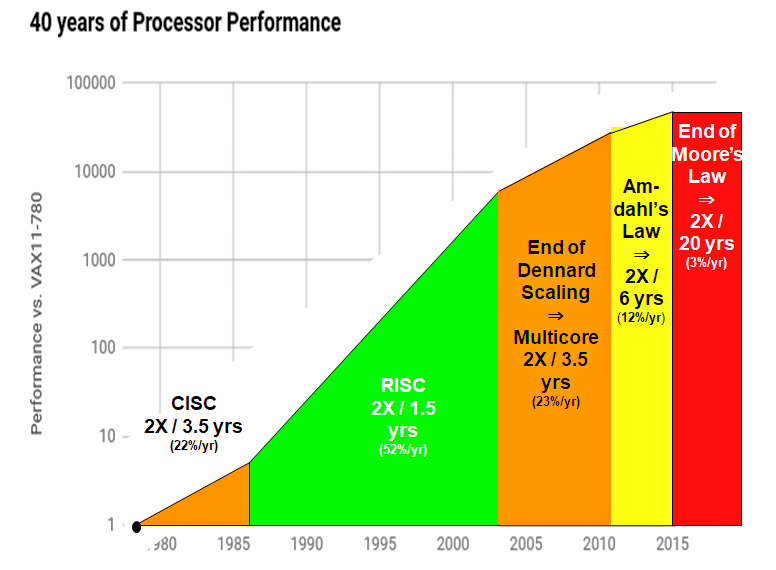

It is fair to say that chip miniaturization has already reached "the end of Moore's Law". The era in which miniaturization by itself raised processing speed and reduced power consumption and cost has ended. In recent years, the chip industry has pursued miniaturization and multicore designs while accepting higher costs. It is also trying to get past the physical limits on integration by giving chips a three-dimensional structure.

Intel co-founder Gordon Moore predicted in 1965 that the number of transistors per chip would double every one to two years (the period was later revised to 18 to 24 months). This law is the reason for Intel's existence, and it was one of the driving forces behind the overwhelming evolution of modern society. But the law is over. DRAM chips in 2014 had 8 billion transistors, but DRAM chips with 16 billion transistors will not be mass-produced until 2019. Under Moore's Law, DRAM chips with 32 billion transistors should already exist by 2019. The Intel Xeon E5 microprocessor had 2.3 billion transistors in 2010 and 7.2 billion in 2016, which also falls short of the law. Semiconductor manufacturing technology is more advanced than ever, but the pace of performance improvement is slowing.
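The Xeon figures can be checked with simple arithmetic. The sketch below is only an illustration: it assumes a clean two-year doubling period, uses the transistor counts quoted above, and the function names are hypothetical.

```python
import math


def projected_count(start_count, start_year, end_year, doubling_years=2.0):
    """Transistor count expected if the count doubles every `doubling_years`."""
    return start_count * 2 ** ((end_year - start_year) / doubling_years)


def observed_doubling_years(start_count, end_count, start_year, end_year):
    """Doubling period implied by two observed transistor counts."""
    return (end_year - start_year) / math.log2(end_count / start_count)


# Xeon E5 figures quoted in the text: 2.3 billion (2010), 7.2 billion (2016).
expected_2016 = projected_count(2.3e9, 2010, 2016)
actual_period = observed_doubling_years(2.3e9, 7.2e9, 2010, 2016)

print(f"Expected in 2016 with 2-year doubling: {expected_2016 / 1e9:.1f} billion")
print(f"Doubling period implied by the actual figures: {actual_period:.1f} years")
```

Under these assumptions a two-year doubling would have given about 18.4 billion transistors in 2016, while the quoted counts imply a doubling period of roughly 3.6 years.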

Less well known but equally important is Dennard scaling. Robert Dennard's insight in 1974 means, roughly, that as miniaturization progresses, processing speed increases, more complex circuits can be integrated into the same area of silicon, and power consumption falls.

Miniaturization was a magic formula that promised performance improvement. Dennard scaling ended in the mid-2000s, thirty years after it was first observed. As the benefits of miniaturization shrank, semiconductor companies tried to save Moore's Law by shifting from single-processor performance improvement to multicore performance improvement. However, multicore performance improvement is itself constrained by Amdahl's law.

The industry has kept delivering economic benefits through forms such as the system-on-chip, with the help of software improvements and fine-grained optimizations such as instruction set simplification, out-of-order execution, and speculative execution. Industry and academia are doing everything they can, but to borrow the "exploration and exploitation" terminology of machine learning, the returns from exploitation are saturating markedly. David Patterson, a professor at the University of California, Berkeley, points out that processor performance now "doubles" only once in 20 years.

Miniaturization runs into both economic and physical limits. Only one or two foundries are capable of manufacturing a 7 nm process, and building a fab line requires capital investment of over 1 billion dollars. Developing miniaturization technology is very difficult: the manufacturing method and materials have to change every time the process shrinks. The time needed to develop each new generation has been getting longer. At the same time, chip development does not necessarily guarantee the commercial success needed to recover the investment, so the risk taken by foundries (semiconductor manufacturers) is rising.
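Amdahl's law says that the serial part of a program caps the speedup that extra cores can deliver. A minimal sketch follows; the 90% parallel fraction is an assumed figure chosen only for illustration, and the function name is hypothetical.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Overall speedup when only `parallel_fraction` of the work scales with cores."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Assumed workload: 90% of the run time can be parallelized.
for cores in (2, 4, 8, 64, 1024):
    print(cores, round(amdahl_speedup(0.9, cores), 2))
```

However many cores are added, the speedup in this example never reaches 10x, because the 10% serial part still has to run on one core.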

There are economic limits, in that miniaturization is becoming less cost-efficient, and physical limits, in that chips can only be made so small. John Hennessy and David Patterson declare "the end of Moore's Law" and propose introducing architectures and software specialized for specific purposes. In recent years many people have also described the present as the "Cambrian" of computer architecture, after the period in which living things diversified explosively. What we need now is not only the general-purpose von Neumann architecture, but also architectures tailored to specific purposes.

This is shaking the industry. The new iPhones and MacBooks that Apple released in 2018 could no longer show dramatic performance improvement. The Intel CPU in the MacBook seems to have had its core count increased more than necessary in order to counter AMD, and it runs hot. It performs well when it is used after being chilled in a refrigerator. The evolution of hardware no longer translates into the evolution of your experience. This problem is addressed by the evolution of the cloud, and computer architecture itself has many different options for evolution. Let's dig into the latter first. The end of Moore's Law is a major turning point.

The latest edition of John Hennessy and David Patterson's classic book, "Computer Architecture: A Quantitative Approach, Sixth Edition", adds a chapter on "Domain Specific Architectures". Google's TPU (Tensor Processing Unit) and Microsoft's FPGAs (field-programmable gate arrays) are listed as examples of domain-specific architectures (DSAs). Hennessy has become chairman of Google's parent company Alphabet, and Patterson has worked on the development of the TPU with Google engineers. Clearly something new is happening at the company.

To understand the TPU and other chips specialized for machine learning, we first have to explain the GPU (graphics processing unit).

The CPU focuses on general-purpose processing, while the GPU is specialized for image processing. The difference between them lies in parallel processing. A CPU consists of several cores optimized for sequential processing, whereas a GPU is made up of thousands of smaller, more efficient cores designed to handle many tasks simultaneously. After receiving drawing information from the CPU, the GPU immediately runs vertex processing, texture lookup, and per-pixel processing including lighting calculations, and outputs the result to the screen. Vertex processing and pixel processing are both highly parallel.
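Per-pixel work parallelizes well because each output pixel depends only on its own input. The toy sketch below illustrates that independence; the `shade` function and the sample image are hypothetical. Python threads are used only to show that the work can be split freely, not to reproduce GPU performance.

```python
from concurrent.futures import ThreadPoolExecutor


def shade(pixel, light=0.5):
    """Per-pixel lighting: scale each RGB channel using only this pixel's data."""
    return tuple(min(255, int(channel * light)) for channel in pixel)


def render_sequential(pixels):
    """CPU style: one worker walks through the pixels in order."""
    return [shade(p) for p in pixels]


def render_parallel(pixels, workers=4):
    """GPU style in spirit: several workers each take pixels independently."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(shade, pixels))


image = [(200, 100, 50), (10, 20, 30), (255, 255, 255), (0, 128, 64)]
print(render_sequential(image) == render_parallel(image))
```

Because no pixel needs another pixel's result, the work can be divided among any number of workers without changing the output.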

The term "GPU" was popularized by NVIDIA. In 1999 NVIDIA released the GeForce 256 and marketed it as "the world's first GPU". In fact, the RCA Studio II in 1976 and the Atari 2600 in 1977 contained a "video chip", which is considered the first chip specialized for image processing. As the use of CG in games and movies grew, the GPU market expanded. GPU performance then improved dramatically, but the market did not keep up with it: only 3D games could make full use of that performance.

The expansion from image processing to general-purpose use began in 2007. When CUDA, a development environment for GPGPU (general-purpose computing on GPUs), was opened to the public in 2007, many users became interested in it as a new parallel computing platform that offered high computing performance at low cost, and GPGPU research gained wide attention. CUDA is a programming environment that gives users access to NVIDIA GPUs at the lowest layer. AMD also launched a development environment consisting of the ATI Stream SDK (AMD Stream SDK) and Brook+ in 2008. GPU performance improves faster than CPU performance. In response to demand from HPC (high performance computing), GPUs have been developed with GPGPU performance in mind, not only graphics. NVIDIA invested heavily in GPGPU and overwhelmed AMD.

GPGPU had an amazing by-product: machine learning. GPUs are used for the parallel computation of CNNs (convolutional neural networks). In the late 2000s, game-changing work came to the fore, including Geoff Hinton's backpropagation and stochastic gradient descent and Yann LeCun's convolutional neural networks. "Artificial intelligence", which has lived through two long and miserable winters, is booming.

Andrew Ng of Stanford University and NVIDIA began working on applying GPUs to machine learning around 2007, when NVIDIA released CUDA. Ng demonstrated GPU acceleration of CNN training with the famous "Google's cat", a model that recognizes cats. At the 2012 ImageNet competition, Alex Krizhevsky of the University of Toronto carried out large-scale GPU training and clearly outperformed traditional computer vision experts.

Enthusiasm for machine learning has grown ever since, and demand for GPUs keeps increasing. One episode shows how intense the enthusiasm is. The paper deadline for Neural Information Processing Systems (NIPS), a top machine learning conference held in December 2016, was May 19th. Research teams around the world used cloud GPUs all at once, so the GPU resources of Google Cloud and Microsoft Azure were temporarily exhausted (The Register).

Success in machine learning has rapidly raised NVIDIA's stock price. Nikkei BP, a Japanese business publisher, once described NVIDIA as the "mysterious AI semiconductor maker that Toyota relies on", but NVIDIA's market capitalization is now approaching Toyota's. NVIDIA has monopolized chips for machine learning, which scares the tech giants, its biggest users.

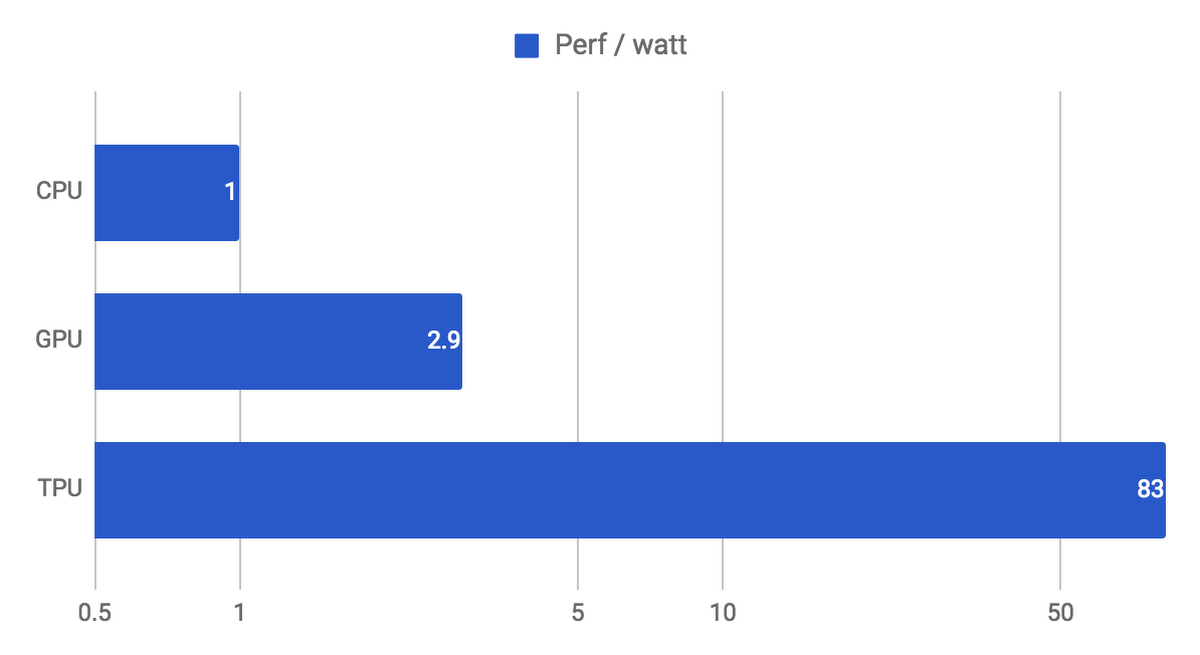

2.3 Google TPU

At the end of Moore's Law, domain-specific architectures (DSAs) are the future of computing. Google's TPU (Tensor Processing Unit) was first introduced in 2015. Google's cloud services running on TPUs serve more than 1 billion people. The TPU is described as performing deep neural network (DNN) inference 15 to 30 times faster than contemporary CPUs and GPUs, with 30 to 80 times better energy efficiency.

Google first considered deploying GPUs, FPGAs, and ASICs (application-specific integrated circuits) in its data centers in 2006. At that time, the applications that could run on special hardware could run almost for free using the excess capacity of Google's large data centers, and it was difficult to improve on that without additional expense. In other words, the data centers could handle the situation as it stood, but they could not cope with an expansion in computing demand.

However, in 2013 Google estimated that if users searched by voice for three minutes a day using speech recognition DNNs, the computation demand on its data centers would double. Meeting that demand with conventional CPUs would be very expensive. Google therefore launched a high-priority project to quickly develop a custom chip for inference. The goal was to improve cost performance by 10 times. The TPU was designed, verified, built, and deployed in Google's data centers in just 15 months, whereas chip development usually takes around 3 years (Communications of the ACM, "A Domain-Specific Architecture for Deep Neural Networks").

2.4 Technical description of TPU

The TPU's key features are a matrix multiply unit that provides high throughput for applications dominated by large matrix multiplications, and a large software-controlled on-chip memory. The GPU is specialized for vector operations, while the TPU is optimized for neural network (NN) inference and training by specializing in matrix multiplication.

The matrix multiply unit (MXU) uses 65,536 8-bit integer multipliers and pushes data through them one after another in a systolic array architecture. The TPU is single-threaded and employs a complex instruction set computer (CISC) architecture, in which a single high-level instruction can trigger many low-level operations.
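The idea of a systolic array can be sketched in a few lines. The toy below is an output-stationary array written for illustration only, and it is not a description of the TPU's actual dataflow; the function and variable names are hypothetical. Values of `a` flow to the right and values of `b` flow downward, one cell per time step, and each cell multiplies what passes through it and adds the product to its own accumulator. For scale, 65,536 multipliers correspond to a 256 x 256 grid.

```python
def systolic_matmul(a, b):
    """Multiply matrices a (n x m) and b (m x p) on a simulated n x p systolic array."""
    n, m, p = len(a), len(b), len(b[0])
    acc = [[0] * p for _ in range(n)]    # one accumulator per cell
    a_reg = [[0] * p for _ in range(n)]  # value currently moving right through each cell
    b_reg = [[0] * p for _ in range(n)]  # value currently moving down through each cell

    for t in range(n + m + p - 2):       # steps until the last operands reach the far corner
        # Visit cells from the bottom-right so each cell still sees the values
        # its left and upper neighbours held during the previous step.
        for i in reversed(range(n)):
            for j in reversed(range(p)):
                if j == 0:               # left edge: feed row i of a, delayed by i steps
                    k = t - i
                    a_in = a[i][k] if 0 <= k < m else 0
                else:
                    a_in = a_reg[i][j - 1]
                if i == 0:               # top edge: feed column j of b, delayed by j steps
                    k = t - j
                    b_in = b[k][j] if 0 <= k < m else 0
                else:
                    b_in = b_reg[i - 1][j]
                a_reg[i][j], b_reg[i][j] = a_in, b_in
                acc[i][j] += a_in * b_in  # multiply-accumulate inside the cell
    return acc


# Small integers stand in for 8-bit operands.
print(systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
```

Each operand is read from memory once and then reused as it moves from cell to cell, which is why this layout suits large matrix multiplications.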

The TPU is fully specialized for NN inference and training, and Google explains that it is superior to CPUs and GPUs for machine learning workloads.

- On AI workloads that run inference with neural networks, the TPU performs 15 to 30 times faster than contemporary GPUs and CPUs.

- The energy efficiency of the TPU is much higher than that of conventional chips, showing a 30 to 80 times improvement.

- The amount of code for the neural networks behind these applications is surprisingly small, only 100 to 1,500 lines. The code is based on TensorFlow, a popular open source machine learning framework developed by Google.

Google points out that GPU power consumption was a bottleneck in the data center. Hardware investment for data centers should be judged by total cost of ownership (TCO): the total cost including not only the purchase price but also the cost of continuing to operate the machine for as long as it is owned.

John Hennessy points out that processor power efficiency is critical in data centers. Electricity has a heavy effect on total cost and matters more than the purchase price of the processors. The TPU team disclosed electricity costs, which correlate with TCO, although it did not disclose TCO itself for business reasons.
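The relationship between purchase price, power draw, and TCO can be written as a simple formula. The sketch below is a deliberately simplified model with hypothetical parameter names and made-up numbers; it is not Google's TCO model, which has not been disclosed, and it ignores items such as cooling, space, and maintenance unless they are folded into `overhead_factor`.

```python
def total_cost_of_ownership(purchase_usd, avg_power_watts, years,
                            usd_per_kwh, overhead_factor=1.0):
    """Purchase price plus the electricity consumed over the service life."""
    hours = years * 365 * 24
    energy_kwh = avg_power_watts / 1000 * hours * overhead_factor
    return purchase_usd + energy_kwh * usd_per_kwh


# Hypothetical accelerators: B costs more to buy but draws far less power.
chip_a = total_cost_of_ownership(purchase_usd=1000, avg_power_watts=300,
                                 years=4, usd_per_kwh=0.10, overhead_factor=1.5)
chip_b = total_cost_of_ownership(purchase_usd=1500, avg_power_watts=75,
                                 years=4, usd_per_kwh=0.10, overhead_factor=1.5)
print(f"Chip A: ${chip_a:,.0f}  Chip B: ${chip_b:,.0f}")
```

With these assumed figures the chip that is cheaper to buy ends up more expensive to own, which is the reason the text treats power efficiency as a first-class criterion.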

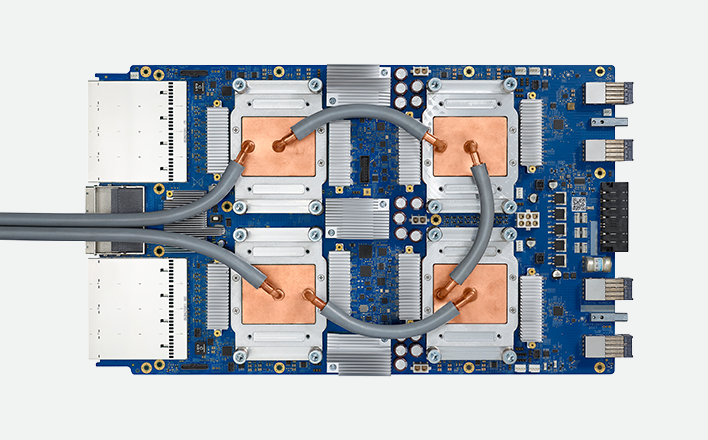

TPU v1 (2015) supported inference only, while TPU v2 (2017) supports both inference and training. Google says that a pod made up of TPU v3, announced in May 2018, is eight times faster than the previous generation pod. TPU v3 adopts water cooling for the first time. Liquid coolant has a far larger heat capacity than air, so it generally cools better than air cooling.

Google is promoting machine learning specialization not only in hardware but also in software, in the form of TensorFlow. TensorFlow has its roots in "DistBelief", a closed source deep learning system developed by the Google Brain project. Google designed TensorFlow from scratch for distributed processing and made it work optimally with the TPUs in Google's data centers. TensorFlow is designed to work effectively for deep learning applications. However, Google does not necessarily aim to vertically integrate Cloud TPU and TensorFlow: TensorFlow is open source, and it also supports ordinary CPUs and NVIDIA GPUs.

2.5 MS Catapult FPGA

Microsoft is making a similarly strong investment in machine learning chips, in its case FPGAs. An FPGA is a general purpose LSI whose internal logic can be rewritten, which lets it run a specific process much faster than a general purpose microprocessor. Microsoft is trying to provide computing resources for machine learning with FPGAs. An FPGA can be reconfigured for various types of machine learning models. This "flexibility" enables machine learning acceleration based on the optimum numerical precision and memory model.
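Why numerical precision is worth tuning can be shown with a small sketch. It rounds a handful of made-up weights to signed integers of different bit widths and measures how far they move. This is a generic symmetric quantization scheme that I chose for illustration; it is not Microsoft's number format, and the function names and weight values are hypothetical.

```python
def quantize(values, bits):
    """Round values to signed `bits`-bit integer levels and map them back to floats."""
    levels = 2 ** (bits - 1) - 1                      # e.g. 127 for 8 bits
    max_abs = max(abs(v) for v in values) or 1.0      # avoid dividing by zero
    scale = max_abs / levels                          # size of one quantization step
    return [round(v / scale) * scale for v in values]


def max_error(values, bits):
    """Largest absolute difference between original and quantized values."""
    return max(abs(v - q) for v, q in zip(values, quantize(values, bits)))


if __name__ == "__main__":
    weights = [0.82, -0.33, 0.05, -0.91, 0.47]        # made-up example weights
    for bits in (4, 8, 16):
        print(bits, "bits -> max error", max_error(weights, bits))
```

Fewer bits mean smaller multipliers and less memory traffic, at the price of a larger rounding error. Hardware with a fixed number format has to pick one point on that curve for every model, while reconfigurable logic can in principle pick a different point per model.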

Project Catapult is a project to deploy FPGAs at large scale in Microsoft's cloud. In 2010, Microsoft Research NExT researchers Doug Burger and Derek Chiou began exploring GPUs, FPGAs and ASICs as "post-CPU" technologies. Microsoft decided that adopting FPGAs could deliver efficiency and performance without the cost, complexity, and risk of developing a custom ASIC.

According to Doug Burger, by the second half of 2015 the Catapult FPGA board was in almost all new servers purchased by Microsoft, where it was used for Bing, Azure and other services. Since then the deployment has grown in scale and spread all over the world, and Microsoft says that it uses more FPGAs than any other company on the planet.

These FPGAs are manufactured by Intel. Within Project Catapult, Microsoft calls the part specialized in machine learning "Project Brainwave". It achieves low latency for inference requests. Project Brainwave provides computing power only for inference and does not handle training. Brainwave's FPGAs are designed to process live data streams such as video, sensor data, and search queries, and to deliver the results to users quickly.

Microsoft emphasizes processing at the edge and IoT capability: "We are considering 1.5 billion Windows terminals in the world as one edge device, and Windows will officially introduce a runtime environment to leverage machine learning to Windows."

In April 2018, Microsoft released "Azure Sphere", a software and hardware stack for edge devices that realizes the "Intelligent Edge". Azure Sphere consists of a built-in microcontroller unit (MCU), a custom OS built for IoT security, and a cloud security service to protect devices.

Julia White, CVP of Microsoft Azure, wrote on the official blog that "Microsoft will invest $5 billion in IoT", citing A.T. Kearney's forecast that IoT will bring about $1.9 trillion worth of productivity improvement and $177 billion worth of cost reductions by 2020.

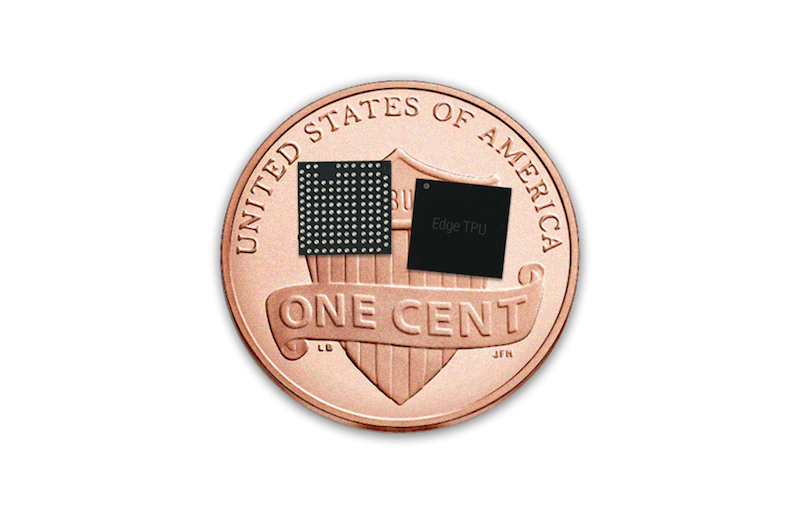

Google has also announced that it will sell the Edge TPU for use outside its data centers. The Edge TPU is intended for IoT devices, gateways, edge computing devices, and so on. It is provided to customers in the form of a SOM (System on Module). The SOM is equipped with an SoC containing a quad-core Arm CPU and a GPU, the Edge TPU, Wi-Fi, and a Microchip secure element.

The Edge TPU was designed to run TensorFlow Lite machine learning models at the edge. Google says it is concentrating on optimizing "performance per watt" and "performance per dollar". The Edge TPU is designed to complement Cloud TPU: Google accelerates ML training in the cloud and performs ML inference at the edge. Sensors will become more than data collectors, making intelligent decisions locally and in real time.

Cloud IoT Edge is software that extends the data processing and machine learning functions of Google Cloud to gateways, cameras, and end devices, making IoT applications smarter, safer and more reliable. Both Google and Microsoft are extending the computing capability of their clouds to the edge, in both hardware and software, in order to provide the increasingly important machine learning functions there.

Why do they develop chips for machine learning and extend resources from the cloud to the edge? The background is two buzzwords: IoT and edge computing.

Read the second half: 2019 Prediction: Post-Mobile, Strike Back Of Edge (2)

Image via Qualcomm